As generative AI tools become more common in daily life, helping with emails, searches, and homework, their hidden carbon cost is rising.

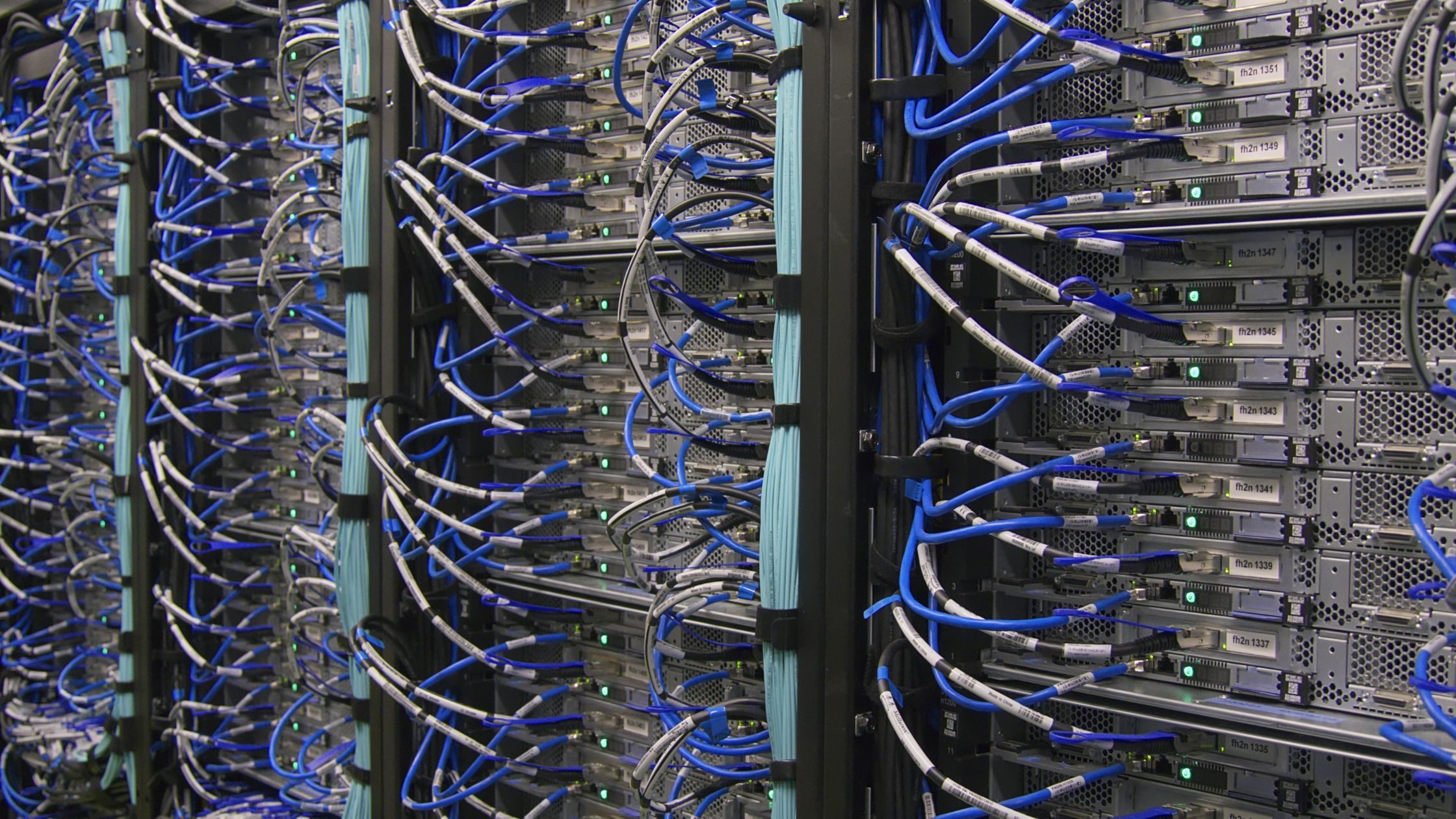

The U.S. Department of Energy says that AI-driven demand could push data centers to use up to 12% of the country’s electricity supply by 2028, up from about 4.4% in 2023. Meeting this demand may require burning more fossil fuels, especially coal and natural gas, which would release more greenhouse gases into the atmosphere.

But not all AI chatbots are equally hard on the environment.

Being smarter doesn’t always mean being greener.

A recent study in Frontiers in Communication looked at 14 open-source large language models (LLMs) and tested each one on 500 multiple-choice and 500 free-response questions from five different academic subjects.

Main results:

- Bigger models usually gave more accurate answers, but they also used a lot more energy.

- Some models emitted up to four times as much carbon dioxide per answer as others without becoming much more accurate.

- Reasoning-heavy questions, such as those in abstract algebra, required more computation and produced more CO₂ than fact-based questions, such as those in history.

Maximilian Dauner, the study’s lead researcher, from Munich University of Applied Sciences, stressed the importance of choosing the right model for the job: “We don’t always need the biggest, most heavily trained model to answer simple questions.”

Reasoning models use more energy, but they aren’t always more accurate.

Reasoning models, which spell out their thinking step by step, tend to give longer answers and use more energy. Yet their accuracy gains are often slight.

For instance:

- DeepSeek-R1, one of the largest models tested, emitted the most CO₂ per response, yet its answers were no more accurate than those of smaller, more efficient models.

- Cogito 70B also consumed a lot of energy without large gains in accuracy.

Where AI runs affects its carbon footprint.

Location also matters. The study relied on global average figures for CO₂ emissions, but real emissions vary widely depending on the local electricity grid.

Jesse Dodge, a senior researcher at the Allen Institute for AI, said that training an AI model in the central U.S. can release three times as much CO₂ as training it in Norway, because of differences in the availability of renewable energy.
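The reason location matters comes down to a simple multiplication: the CO₂ a computing job releases is roughly the electricity it uses times the carbon intensity of the grid supplying that electricity. The short Python sketch below illustrates that relationship. The grid names, intensity values, and job energy are hypothetical placeholders chosen for round numbers, not measurements from the study or from Dodge’s work.

```python
# Back-of-the-envelope sketch: operational emissions = energy used x grid carbon intensity.
# All numbers below are HYPOTHETICAL placeholders, not measured values.

def emissions_grams(energy_kwh: float, grid_intensity_g_per_kwh: float) -> float:
    """Return grams of CO2-equivalent for a job using energy_kwh on a given grid."""
    if energy_kwh < 0 or grid_intensity_g_per_kwh < 0:
        raise ValueError("inputs must be non-negative")
    return energy_kwh * grid_intensity_g_per_kwh

# Hypothetical carbon intensities in grams of CO2e per kWh.
GRIDS = {
    "low_carbon_grid": 100.0,    # e.g. a grid dominated by hydro or wind (assumed)
    "fossil_heavy_grid": 300.0,  # e.g. a grid dominated by coal and gas (assumed)
}

JOB_ENERGY_KWH = 1_000.0  # hypothetical energy for one training job

for name, intensity in GRIDS.items():
    grams = emissions_grams(JOB_ENERGY_KWH, intensity)
    print(f"{name}: {grams / 1000:.0f} kg CO2e")
```

Running the identical job on the two hypothetical grids prints 100 kg and 300 kg of CO₂e: the computation does not change, only the electricity source does.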

Length, not subject matter, drives emissions.

Sasha Luccioni, climate lead at Hugging Face, said that energy use is driven by the length of the input and output, not by the subject matter. Her earlier research, which analyzed 88 AI models, found that:

- Text-generation tasks, like those chatbots perform, use ten times as much energy as simpler AI tasks, such as sorting emails.

- Larger models consistently had higher emissions.
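Luccioni’s point about length can be illustrated with a deliberately simplified model in which every token a model reads or writes costs the same fixed amount of energy. This is an assumption for illustration only: in practice, processing the prompt and generating the answer cost different amounts, and the figures depend on the model and hardware. The per-token value below is a hypothetical placeholder.

```python
from dataclasses import dataclass

# ASSUMPTION: a simplified linear model where each processed token costs the
# same energy. Real inference costs differ between reading the prompt and
# generating output, and vary by model and hardware.
JOULES_PER_TOKEN = 2.0  # hypothetical placeholder, not a measured value

@dataclass
class Query:
    input_tokens: int   # length of the question (prompt)
    output_tokens: int  # length of the model's answer

def estimated_energy_joules(q: Query, joules_per_token: float = JOULES_PER_TOKEN) -> float:
    """Estimate energy as (input tokens + output tokens) x energy per token."""
    return (q.input_tokens + q.output_tokens) * joules_per_token

concise = Query(input_tokens=50, output_tokens=30)     # short, direct answer
reasoning = Query(input_tokens=50, output_tokens=600)  # long step-by-step answer

print(estimated_energy_joules(concise))    # 160.0
print(estimated_energy_joules(reasoning))  # 1300.0
```

Note that the topic of the question never enters the calculation. Under this assumption, a long, step-by-step answer costs more simply because it contains more tokens.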

Luccioni said that most people don’t need LLMs for everyday tasks: “We’re reinventing the wheel.” In other words, use a calculator as a calculator.

The Bottom Line: Use AI Sparingly

Generative AI models are powerful, but they are not always necessary. For basic information retrieval, traditional search engines or simpler tools are often more accurate and use less energy.

As AI tools grow larger, developers and users will need to weigh performance against environmental cost, making deliberate choices that balance speed with long-term sustainability.